For years, enterprises treated the cloud as the ultimate destination for computing. Centralized infrastructure promised scale, accessibility, and predictable cost. Data lakes grew endlessly, and AI models flourished in vast clusters of remote compute power. The future of AI may now bring the end of the cloud’s golden era.

The push to put everything in the cloud worked – until the laws of physics and economics caught up. Latency, bandwidth, privacy, and energy are now real constraints. Every byte that crosses a network consumes time, power, and money. As AI models grow larger and more complex, the cost of moving data often outweighs the value of processing it.

This is where the next chapter in the Future of AI begins. Intelligence is migrating from distant data centers to the physical world, bringing computation closer to where data originates. The cloud was the foundation, but the edge is becoming the next frontier.

Why the Future of AI Belongs to the Edge

Three forces are driving this shift: speed, efficiency, and trust.

- Speed defines real value. AI is only as useful as its timing. In environments like manufacturing, vehicles, or utilities, milliseconds matter. Sending data across continents for processing introduces lag. Edge computing removes that network round trip by bringing intelligence directly to where it’s needed.

- Efficiency is equally important. Cloud data centers, while powerful, are also energy-intensive. As AI workloads scale, power demand rises steeply. Edge AI can reduce energy use by performing inference locally, using hardware optimized for performance per watt.

- Trust closes the loop. Data privacy and sovereignty are now strategic priorities. Enterprises must keep sensitive data under local control to comply with laws and industry standards. Edge AI lets them analyze data safely at the source while maintaining compliance and operational independence.
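
To put the speed argument in rough numbers, here is a back-of-the-envelope sketch. It assumes light travels through optical fiber at about 200,000 km/s (roughly two-thirds of its speed in a vacuum) and ignores routing, queuing, and processing time, so real round trips will be slower. The function name and the distances are illustrative, not taken from any specific deployment.

```python
# Approximate speed of light in optical fiber (assumption: about 2/3 of c).
FIBER_KM_PER_S = 200_000


def min_round_trip_ms(distance_km: float) -> float:
    """Lower bound on the network round-trip time over fiber, in milliseconds."""
    one_way_s = distance_km / FIBER_KM_PER_S
    return 2 * one_way_s * 1000


# Illustrative distances: an intercontinental link versus a device 100 m away.
print(f"10,000 km: {min_round_trip_ms(10_000):.3f} ms")
print(f"100 m:     {min_round_trip_ms(0.1):.3f} ms")
```

Even under these generous assumptions, the intercontinental round trip costs on the order of 100 milliseconds before any processing begins, while the local hop is effectively free.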

The Future of AI depends on this new balance. Cloud for scale, edge for immediacy. Together, they form an adaptive, intelligent ecosystem.

Edge AI in Action: Real-World Transformation

The shift toward edge intelligence isn’t theoretical – it’s happening now.

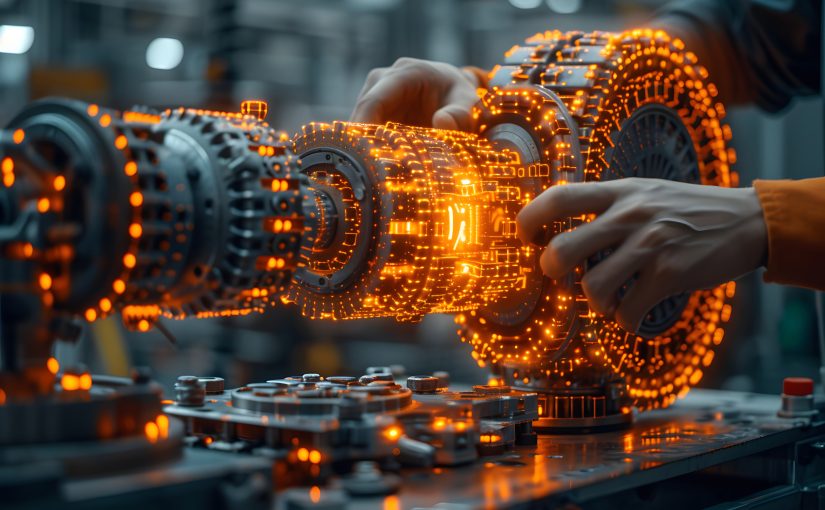

In manufacturing, predictive maintenance powered by local AI is becoming the norm. Embedded sensors analyze vibration, temperature, and acoustic data directly on machines. Instead of streaming massive datasets to the cloud, edge processors detect anomalies and forecast failures instantly. The result is higher uptime, less waste, and faster response.
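
As a rough illustration of on-device anomaly detection, the sketch below flags a vibration reading that strays far from a recent baseline. It is a simplified stand-in, not how any particular product works: the rolling z-score method, the class name, the window size, and the threshold are all assumptions chosen for brevity.

```python
from collections import deque
from statistics import mean, stdev


class RollingAnomalyDetector:
    """Flags readings that deviate strongly from a recent window (hypothetical sketch)."""

    def __init__(self, window: int = 50, threshold: float = 3.0):
        self.readings = deque(maxlen=window)  # recent normal readings only
        self.threshold = threshold            # z-score above which we alert

    def update(self, value: float) -> bool:
        """Return True if `value` is anomalous relative to the current window."""
        is_anomaly = False
        if len(self.readings) >= 10:  # wait for a minimal baseline
            mu = mean(self.readings)
            sigma = stdev(self.readings)
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                is_anomaly = True
        if not is_anomaly:
            # Only normal readings extend the baseline, so a fault does not mask itself.
            self.readings.append(value)
        return is_anomaly


detector = RollingAnomalyDetector(window=50, threshold=3.0)
vibration = [1.0, 1.1, 0.9, 1.05, 0.95] * 4 + [5.0]  # made-up sensor values
alerts = [i for i, v in enumerate(vibration) if detector.update(v)]
print(alerts)  # prints [20]
```

Everything here runs in constant memory on the device, so only the alert, not the raw signal, needs to leave the machine.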

In security and surveillance, edge AI is transforming how organizations monitor and protect their spaces. Smart cameras with onboard AI chips can detect patterns, unusual behavior, or threats in real time – without sending constant video streams to remote servers. This improves privacy, reduces bandwidth, and enables faster decisions. Smart cities already use this model to identify traffic incidents or safety risks immediately. Enterprises apply it to access control, intrusion detection, and facility monitoring with greater efficiency.

These examples reveal a key truth: the Future of AI lies in bringing intelligence to where data is born. Moving compute to the data makes AI faster, safer, and far more scalable.

Hardware Innovation Is Fueling the Future of AI

This evolution wouldn’t be possible without a revolution in hardware.

In my current work helping launch a new Edge AI semiconductor chip, I see firsthand how rapidly hardware is evolving. These processors are purpose-built for AI inference. They deliver high throughput with minimal power use, integrating computing and memory to minimize latency. The goal isn’t to replace the cloud. It’s to complement it.

These new chips act as “micro brains” distributed across networks of devices – cameras, robots, sensors, and vehicles. To make them effective, software and hardware must be co-designed. Models need to be optimized for each device’s constraints. Compilers, optimizers, and inference runtimes, such as TensorRT, OpenVINO, and ONNX Runtime, translate neural networks into efficient hardware instructions.
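
One common way to fit a model to a device's constraints is quantization, which stores weights as small integers instead of 32-bit floats. The sketch below shows the basic idea with symmetric 8-bit quantization in plain Python. Quantization is my choice of example here, and the function names and weights are hypothetical; production toolchains typically use more elaborate schemes, such as calibration data and per-channel scales.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Map float weights to integers in [-127, 127] plus one shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from the integer representation."""
    return [q * scale for q in quantized]


weights = [0.52, -1.27, 0.03, 0.9]  # made-up example weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print(q)          # integer weights, one byte each instead of four
print(max_error)  # rounding error, bounded by about half the scale
```

The trade is a small, bounded loss of precision in exchange for a model that is roughly a quarter of the size and can use integer arithmetic units.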

This co-evolution of silicon and software defines the Future of AI infrastructure. The two are no longer independent layers but an integrated system where each advances the other.

Why Enterprises Should Care Now

The edge isn’t coming. It’s already here. Enterprises that embrace distributed intelligence are moving faster, spending less, and managing risk better.

Consider the automotive industry. The sensor suites on advanced vehicles can produce on the order of gigabytes of data every second. Cloud analysis alone can’t meet real-time demands. Edge AI enables local perception, navigation, and safety decisions.

The energy and telecom sectors follow similar logic. Local AI nodes can stabilize power grids and dynamically manage network traffic. Agriculture, logistics, and defense industries are next.

Forward-looking organizations are treating AI as a hybrid fabric: cloud for training and orchestration, edge for inference and action. This distributed model mirrors nature’s design – central brains paired with countless local reflexes. It’s how the AI ecosystems of the future will work.

Challenges on the Path to the Future of AI

Shifting intelligence to the edge comes with challenges. Model distribution across thousands of devices requires reliable orchestration and update pipelines. Security must be hardened since edge devices can be physically accessed. Encryption, secure boot, and hardware-based trust anchors are becoming baseline requirements.
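
As one small piece of such a pipeline, a device should refuse a model update it cannot authenticate. The sketch below uses an HMAC-SHA256 tag from Python's standard library to show the check. It is a simplification under stated assumptions: the shared key, function names, and model bytes are hypothetical, and a production system would more likely use asymmetric signatures with keys anchored in hardware.

```python
import hashlib
import hmac


def sign_model(model_bytes: bytes, key: bytes) -> str:
    """Server side: compute an HMAC-SHA256 tag over the model artifact."""
    return hmac.new(key, model_bytes, hashlib.sha256).hexdigest()


def verify_model(model_bytes: bytes, tag: str, key: bytes) -> bool:
    """Device side: accept the update only if the tag matches."""
    expected = hmac.new(key, model_bytes, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, tag)  # constant-time comparison


key = b"demo-key-not-for-production"   # placeholder; never hard-code real keys
model = b"\x00\x01fake-model-weights"  # stand-in for a real model file
tag = sign_model(model, key)

print(verify_model(model, tag, key))                # True: untouched artifact
print(verify_model(model + b"tampered", tag, key))  # False: modified artifact
```

The same accept-or-reject step applies whichever signing scheme is used: the device loads new weights only after the check passes.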

Synchronization also matters. Insights generated locally must feed back into centralized analytics for model retraining and governance. Managing this data loop efficiently is a new enterprise discipline.
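
One way to keep that loop cheap is to send compact summaries upstream instead of raw readings. The sketch below condenses a window of local readings into a small JSON payload; the field names, device ID, and values are hypothetical, and a real system would choose statistics that suit its retraining and governance needs.

```python
import json
from statistics import mean


def build_summary(device_id: str, readings: list[float], anomaly_count: int) -> str:
    """Condense a window of local readings into a small JSON payload."""
    summary = {
        "device_id": device_id,
        "count": len(readings),
        "mean": round(mean(readings), 3),
        "min": min(readings),
        "max": max(readings),
        "anomalies": anomaly_count,
    }
    return json.dumps(summary)


# Made-up readings from a single device over one reporting window.
payload = build_summary("press-07", [1.0, 1.1, 0.9, 5.0], anomaly_count=1)
print(payload)
```

The payload stays the same size no matter how many readings the window holds, which is what keeps upstream bandwidth predictable.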

Finally, there’s a human challenge. Many teams were built for cloud-first systems. Edge AI demands expertise in embedded software, distributed networking, and hardware optimization. Bridging this skills gap will be a top priority for any organization serious about owning the Future of AI operations.

These barriers are real, but familiar. When cloud adoption began, many saw similar obstacles around governance, security, and cost. The companies that adapted the fastest became market leaders. The same will be true again.

A New Balance Between Cloud and Edge

The coming years will not be about choosing cloud or edge. Instead, they will be about balance. The Future of AI architecture is a collaboration between centralized intelligence and distributed autonomy.

The cloud will remain critical for model training, orchestration, and long-term analytics. The edge will dominate when real-time context and decision-making are required. Industry leaders will design systems where both layers can cooperate, creating a continuous feedback loop of data, learning, and optimization.

Enterprises that achieve this harmony will not just keep up. They’ll deliver faster responses, smarter automation, and better user experiences while controlling cost and risk.

Final Thoughts

Soon, AI will no longer live solely in data centers. It will live everywhere – inside machines, vehicles, sensors, and devices. The cloud was the first revolution in distributed computing. The edge is the next.

This isn’t a retreat from the cloud but an evolution beyond it. As I’ve seen while working on Edge AI silicon, the convergence of hardware and software is accelerating. (See my prior article, “Will AI Shatter the Old Walls Between Software and Hardware?”) We’re entering an age where intelligence is ambient, embedded in the systems and environments around us.

For enterprises, the takeaway is simple. Don’t wait for the edge to arrive. It’s already here. The Future of AI will belong to those who recognize that the next wave of innovation won’t rise from the cloud – it will rise from the edge.

Keep an open mind. The next frontier is closer than you think!